Variational Fusion of Time-of-Flight and Stereo Data Using Edge Selective Joint Filtering

Baoliang Chen and Cheolkon Jung

Xidian University

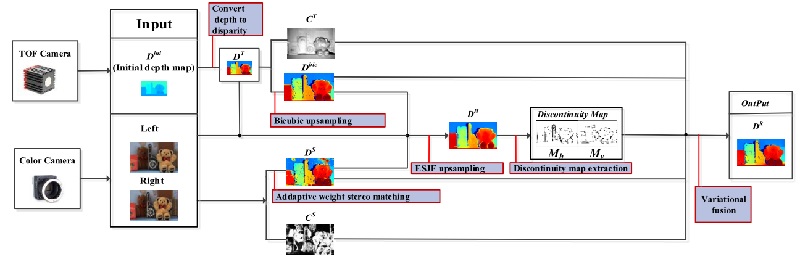

Fig. 1. Framework of the proposed method

Abstract

In this paper, we propose a variational method to fuse time-of-flight (TOF) and stereo data using edge selective joint filtering (ESJF). We use ESJF to up-sample the low-resolution (LR) depth captured by a TOF camera and produce high-resolution (HR) depth maps with accurate edge information. First, we measure the confidence of the two sensors, which have different reliabilities, so that they can be fused accordingly. Then, we up-sample the TOF depth map using ESJF to generate discontinuity maps and preserve depth edges. Finally, we perform variational fusion of the TOF and stereo depth data based on total variation (TV), guided by the discontinuity maps. Experimental results show that the proposed method successfully produces HR depth maps and outperforms state-of-the-art methods in preserving edges and removing noise.
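The abstract outlines three stages: confidence-weighted sensor inputs, edge-aware up-sampling of the TOF depth into discontinuity maps, and TV-based variational fusion. The Python sketch below shows one way such stages can fit together. It is not the authors' implementation, and several pieces are assumptions: ESJF's edge-selection rule is not given here, so a plain joint bilateral up-sampling guided by a grayscale image stands in for it; the per-pixel confidences `c_tof` and `c_st` are passed in rather than measured; and the discontinuity map is turned into TV weights with a hypothetical rule w = exp(-alpha * |grad D|). The fused depth u then minimizes a weighted, smoothed TV energy, E(u) = sum[c_tof (u - d_tof)^2 + c_st (u - d_st)^2] + lam * sum w * sqrt(|grad u|^2 + eps^2), solved by plain gradient descent. All function names and parameter values are illustrative.

```python
# Hypothetical sketch of TOF/stereo fusion; ESJF is replaced by joint bilateral
# upsampling and the confidences are assumed inputs (not the paper's method).
import numpy as np


def joint_bilateral_upsample(depth_lr, guide_hr, scale, sigma_s=1.0, sigma_r=0.1, radius=2):
    """Stand-in for ESJF: each HR pixel averages nearby LR depth samples,
    weighted by distance on the LR grid and by HR guide-intensity similarity."""
    H, W = guide_hr.shape
    h, w = depth_lr.shape
    out = np.zeros((H, W))
    for y in range(H):
        for x in range(W):
            yl, xl = y / scale, x / scale  # HR pixel position on the LR grid
            y0, x0 = int(round(yl)), int(round(xl))
            num = den = 0.0
            for j in range(max(0, y0 - radius), min(h, y0 + radius + 1)):
                for i in range(max(0, x0 - radius), min(w, x0 + radius + 1)):
                    ds2 = (j - yl) ** 2 + (i - xl) ** 2
                    g = guide_hr[min(H - 1, j * scale), min(W - 1, i * scale)]
                    dr2 = (guide_hr[y, x] - g) ** 2
                    wgt = np.exp(-ds2 / (2 * sigma_s**2) - dr2 / (2 * sigma_r**2))
                    num += wgt * depth_lr[j, i]
                    den += wgt
            out[y, x] = num / den
    return out


def discontinuity_weights(depth, alpha=10.0):
    """Hypothetical TV weights: close to 1 in flat regions, close to 0 at depth edges."""
    gy, gx = np.gradient(depth)
    return np.exp(-alpha * np.hypot(gx, gy))


def grad(u):
    """Forward differences with Neumann boundary (last column/row set to 0)."""
    gx = np.zeros_like(u)
    gy = np.zeros_like(u)
    gx[:, :-1] = u[:, 1:] - u[:, :-1]
    gy[:-1, :] = u[1:, :] - u[:-1, :]
    return gx, gy


def div(px, py):
    """Negative adjoint of grad, valid when px[:, -1] == 0 and py[-1, :] == 0."""
    dx = np.zeros_like(px)
    dy = np.zeros_like(py)
    dx[:, 0] = px[:, 0]
    dx[:, 1:] = px[:, 1:] - px[:, :-1]
    dy[0, :] = py[0, :]
    dy[1:, :] = py[1:, :] - py[:-1, :]
    return dx + dy


def tv_fusion(d_tof, d_st, c_tof, c_st, w, lam=0.05, eps=0.05, step=0.1, iters=300):
    """Gradient descent on the weighted, smoothed TV energy E(u).
    step < 2 / L with L <= 2 * max(c_tof + c_st) + 8 * lam * max(w) / eps."""
    u = (c_tof * d_tof + c_st * d_st) / (c_tof + c_st + 1e-12)  # confidence-weighted start
    for _ in range(iters):
        gx, gy = grad(u)
        norm = np.sqrt(gx**2 + gy**2 + eps**2)
        dE = (2 * c_tof * (u - d_tof) + 2 * c_st * (u - d_st)
              - lam * div(w * gx / norm, w * gy / norm))
        u = u - step * dE
    return u


# Synthetic check: a vertical depth step (1.0 | 2.0) with a matching guide image.
rng = np.random.default_rng(0)
gt = np.ones((16, 16))
gt[:, 8:] = 2.0
guide = np.where(gt > 1.5, 0.8, 0.2)
tof_lr = gt[::2, ::2]                              # clean 8x8 LR "TOF" depth
stereo = gt + 0.1 * rng.standard_normal(gt.shape)  # noisy HR "stereo" depth
c_tof = np.full(gt.shape, 0.5)                     # assumed (not measured) confidences
c_st = np.full(gt.shape, 0.5)

tof_up = joint_bilateral_upsample(tof_lr, guide, scale=2)
w = discontinuity_weights(tof_up)
fused = tv_fusion(tof_up, stereo, c_tof, c_st, w)
print(np.abs(stereo - gt).mean(), np.abs(fused - gt).mean())
```

On this synthetic step edge, the fused map is expected to lie closer to the ground truth than the noisy stereo input while keeping the step, because the TV weights are near zero where the up-sampled TOF depth jumps and smoothing is therefore suppressed across the edge. Gradient descent is used only for brevity; a primal-dual TV solver would typically converge faster.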

Paper

DOI: https://doi.org/10.1109/ICIP.2017.8296519

Citation:

Baoliang Chen, Cheolkon Jung, and Zhendong Zhang, “Variational Fusion of Time-of-Flight and Stereo Data Using Edge Selective Joint Filtering,” in Proc. IEEE ICIP, pp. 1437–1441, 2017.


Results:

Fig. 2. Experimental results on the dataset of [1]. From left to right: left color image, TOF result, stereo result, ground truth, and proposed method. Bottom: Marin et al. [2], Yang et al. [3], Zhu et al. [4], Dal Mutto et al. [5], and Dal Mutto et al. [6].

References

[1] Carlo Dal Mutto, Pietro Zanuttigh, and Guido Maria Cortelazzo, “Probabilistic ToF and stereo data fusion based on mixed pixels measurement models,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 37, no. 11, pp. 2260–2272, 2015.

[2] Giulio Marin, Pietro Zanuttigh, and Stefano Mattoccia, “Reliable fusion of ToF and stereo depth driven by confidence measures,” in Proc. European Conference on Computer Vision. Springer, 2016, pp. 386–401.

[3] Qingxiong Yang, Kar-Han Tan, Bruce Culbertson, and John Apostolopoulos, “Fusion of active and passive sensors for fast 3D capture,” in Proc. IEEE Workshop on Multimedia Signal Processing (MMSP). IEEE, 2010, pp. 69–74.

[4] Jiejie Zhu, Liang Wang, Jizhou Gao, and Ruigang Yang, “Spatial temporal fusion for high accuracy depth maps using dynamic MRFs,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 32, no. 5, pp. 899–909, 2010.

[5] Jiejie Zhu, Liang Wang, Ruigang Yang, and James Davis, “Fusion of time-of-flight depth and stereo for high accuracy depth maps,” in Proc. IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2008, pp. 1–8.

[6] Carlo Dal Mutto, Pietro Zanuttigh, Stefano Mattoccia, and Guido Maria Cortelazzo, “Locally consistent ToF and stereo data fusion,” in Proc. ECCV Workshop on Consumer Depth Cameras for Computer Vision, 2012, vol. 12, pp. 598–607.

Acknowledgement

This work was supported by the National Natural Science Foundation of China (No. 61271298) and the International S&T Cooperation Program of China (No. 2014DFG12780).